Who should decide what can and can’t be shared on social platforms? Mark Zuckerberg? Elon Musk? The users themselves?

Well, according to a new study conducted by Pew Research, a slim majority of Americans believe that social platforms should be regulated by the government.

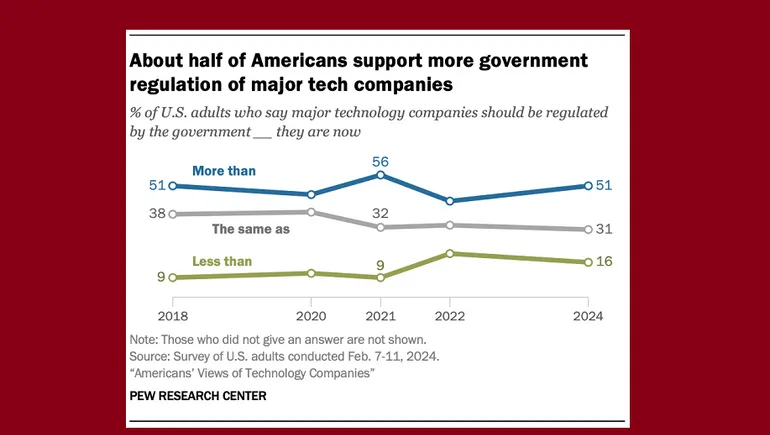

As you can see in this chart, 51% of Americans believe social platforms should be regulated by the government or a government-appointed agency, down from 56% who supported the idea in 2021.

That was right after the 2020 election and the Capitol riots, and in that environment, it makes sense that concerns about the influence of social platforms on political discourse had grown.

But now, as we head into another US election, those concerns seem to have eased, though most people still think the government should do more to police content on social apps.

The question of social media regulation is complex. It’s not ideal for social platform executives to dictate what can and can’t be shared in each app, but regional differences in laws and norms also make universal content rules difficult to apply.

For years, Meta has argued that there should be more government regulation of social media, which would take pressure off its own teams to weigh critical questions around speech. The Covid pandemic intensified the debate on this front, while the use of social media by political candidates and the suppression of dissenting voices in certain regions have put platform decision-makers in a difficult position as to how they should act.

Indeed, Meta, X and every other platform would rather not police user speech at all, but advertiser expectations and government regulations mean they’re forced to act in some cases.

But really, the decision shouldn’t come down to a few executives in a boardroom, debating the merits of certain speech.

That’s what Meta has tried to highlight with its Oversight Board project, in which a panel of experts is appointed to evaluate Meta’s moderation decisions based on user appeals.

The problem is that the group, while operating independently, is still funded by Meta, which many would see as a source of bias. But then again, any government-funded moderation group would face the same accusation, depending on the government of the day. And while such a group could reduce the decision-making pressure on each individual platform, it wouldn’t reduce scrutiny of the decisions themselves.

Maybe, then, Elon Musk is on the right track, focusing instead on crowd-sourced moderation, with Community Notes now playing a much bigger role in X’s process. This lets people decide what’s correct and what’s not, what should be allowed and what should be disputed, and there’s evidence that Community Notes have had a positive effect in reducing the spread of misinformation in the app.

Yet, at the same time, Community Notes can’t currently scale to the level needed to address all content concerns, and notes can’t be added quickly enough to stop certain claims from being amplified before they take hold.

Maybe upvoting and downvoting all posts could be another solution, which would speed up the same process and quickly limit the reach of misinformation.

But that could also be gamed. And again, authoritarian governments are already very active in suppressing speech they don’t like. Empowering them to make such calls won’t lead to a better process.

That’s why it’s such a complex issue. While there’s a clear public appetite for more government oversight, you can also see why governments would be keen to stay out of it.

But they should take responsibility. If regulators implement rules that acknowledge the power and influence of social platforms, they should also look to implement moderation standards along the same lines.

That way, questions about what is and isn’t permissible would be deferred to elected officials, and voters could express their support or opposition at the ballot box.

This is by no means a perfect solution, but it seems better than the current, inconsistent approach.