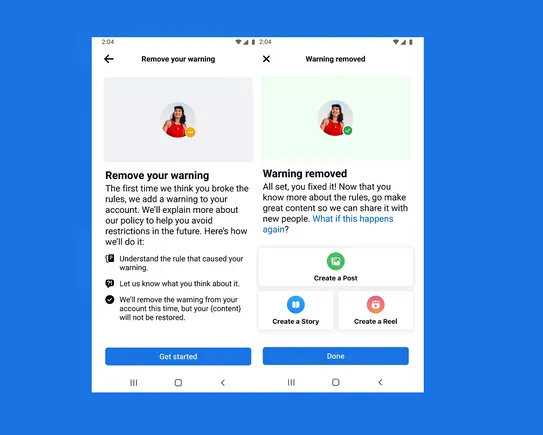

Meta is trying to help creators avoid penalties with a new system for first-time violators. Creators who break Meta's rules for the first time will be able to complete an education process about the specific policy in question, and in return have that warning removed.

According to Meta:

“Now, the first time a creator violates our Community Standards, they will receive a notification to complete an in-app educational training about the policy they violated. Upon completion, their warning will be removed from their record, and if they avoid another violation for one year, they can participate in the ‘Remove Your Warning’ experience again.”

This is similar to the process YouTube implemented last year, which for the first time let violators of its Community Guidelines take a training course to avoid channel strikes.

In both cases, though, the most egregious violations will still result in an immediate penalty.

“Posting content that is sexually exploitative, sells high-risk drugs, or glorifies dangerous organizations and individuals is not eligible for warning removal. We will remove content that violates our policies.”

So this is not a change in policy so much as a change in enforcement. It offers a way to learn from what may be an honest mistake, rather than punishing rule-breakers with restrictions.

However, even if you take these courses, repeated violations within a 12-month period will still result in an account penalty.

The option gives creators more flexibility and aims to improve understanding, as opposed to a more heavy-handed enforcement approach. It is also one of the main recommendations of Meta's independent Oversight Board, which works to provide more explanation and insight into why Meta applies penalties to profiles.

Often, it comes down to misunderstandings, particularly when it comes to more ambiguous material.

As explained by the Oversight Board:

“People often tell us that Meta has removed posts calling attention to hate speech for the purposes of condemnation, mockery, or awareness-raising, because automated systems (and sometimes human reviewers) are unable to distinguish between such posts and hate speech itself. To address this, we asked Meta to create a convenient way for users to indicate in their appeal that their post falls into one of these categories.”

In certain situations, you can see how Meta's more binary reading of content could lead to misinterpretation. This is especially true as Meta relies more heavily on automated systems for detection.

So now you'll have some recourse if you're hit with a Meta penalty, though you only get one warning removal per year. It's not a big change, but it's a helpful one in certain contexts.